Or... it doesn't.

Researchers working for the state of Texas conducted a reassessment of the 2012 maternal mortality records. They hypothesized that data entry errors had led to records being inaccurately classified as maternal deaths. Knowing, as we do, that maternal mortality reporting has considerable data accuracy challenges, this seems on the surface to be a good faith effort.

That said, I have concerns with the methods in the Texas analysis (explained in more detail below). In addition, while the authors do a nice job of stating the limitations of their work--data are not comparable to other years or other locations--the news media did exactly the opposite, comparing the results to other places and times.

In 2012 Texas had 147 mortality records with an ICD-10 code indicating maternal mortality (codes A34, O00–O95, or O98–O99). Texas researchers used record matching and extensive death and health record review for the 147 maternal mortality coded deaths. Through this process the researchers identified birth or pregnancy status (within 42 days) at the time of death. This extensive review found a number of false positive results. Researchers then removed these deaths from the maternal mortality count. On this point, the analysis seems both reasonable and robust.

However, any analysis of data coding errors should clearly identify both false positives and false negatives. The search for false positives was (as described above) robust. The search for false negatives, on the other hand, used record matching alone. This may seem a minor point, but it is important because the robust methods used to find false positives were not similarly applied to find false negatives. Moreover, the record linkage process matched on SSN, name, and county of residence. Given their hypothesis of data entry errors, requiring exact matches on all three of those open-ended data entry fields raises all sorts of possibilities for missed matches. In other words, the methods introduced potential bias in favor of finding false positives and against finding false negatives.
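To make the missed-match concern concrete, here is a minimal Python sketch. It is not the Texas researchers' linkage procedure. The records, field names, and the 0.9 similarity threshold are all invented for illustration. It shows how a single-character data entry error in a name defeats an exact three-field match, while a tolerant comparison still links the two records.

```python
from difflib import SequenceMatcher

# Hypothetical records; every value here is invented for illustration.
death_record = {"ssn": "123456789", "name": "Maria Gonzalez", "county": "Harris"}
birth_record = {"ssn": "123456789", "name": "Maria Gonzales", "county": "Harris"}

FIELDS = ("ssn", "name", "county")


def exact_match(a, b):
    """True only if all three fields agree character for character."""
    return all(a[f] == b[f] for f in FIELDS)


def fuzzy_match(a, b, threshold=0.9):
    """True if every field is at least `threshold` similar (case-insensitive).

    The threshold is an assumed value, not one taken from the Texas report.
    """
    return all(
        SequenceMatcher(None, a[f].lower(), b[f].lower()).ratio() >= threshold
        for f in FIELDS
    )


print(exact_match(death_record, birth_record))  # the one-letter typo breaks the link
print(fuzzy_match(death_record, birth_record))  # a tolerant comparison keeps it
```

A tolerant match trades missed links for some false links, so any threshold like this would need to be validated by reviewing a sample of the matches.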

I recognize that individual case review for 9,000+ death records was probably impractical due to time and funding constraints. Still, more could easily have been done to try to identify false negatives. For example, they might have added a record linkage between all birth records and all death records.
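As a rough sketch of what such a birth-to-death linkage might look like, the Python below flags deaths that carry no maternal code but fall within 42 days after a linked delivery. The field names and records are hypothetical, and linking on SSN alone, with one delivery per mother, is a simplifying assumption. Anything flagged would be a candidate for manual review, not a confirmed maternal death.

```python
from datetime import date

# Hypothetical, invented records for illustration only.
births = [
    {"mother_ssn": "111111111", "delivery_date": date(2012, 3, 1)},
    {"mother_ssn": "222222222", "delivery_date": date(2012, 5, 10)},
]
deaths = [
    {"ssn": "111111111", "death_date": date(2012, 3, 20), "maternal_code": False},
    {"ssn": "222222222", "death_date": date(2012, 11, 2), "maternal_code": False},
    {"ssn": "333333333", "death_date": date(2012, 7, 4), "maternal_code": True},
]


def candidate_false_negatives(births, deaths, window_days=42):
    """Return deaths with no maternal code that occur within
    `window_days` after a linked delivery."""
    # Assumes one delivery per mother; a real linkage would handle several.
    delivery_by_ssn = {b["mother_ssn"]: b["delivery_date"] for b in births}
    flagged = []
    for d in deaths:
        delivery = delivery_by_ssn.get(d["ssn"])
        if delivery is None or d["maternal_code"]:
            continue  # no linked birth, or already counted as maternal
        days_after = (d["death_date"] - delivery).days
        if 0 <= days_after <= window_days:
            flagged.append(d)
    return flagged


for record in candidate_false_negatives(births, deaths):
    print(record["ssn"], record["death_date"])
```

A linkage like this only covers pregnancies that appear in the birth records, so it would narrow the pool for case review without replacing it.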

And... Here's the piece that puzzles me most...

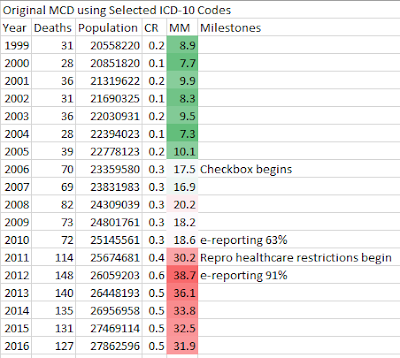

The Texas researchers posit (repeatedly) that the number of false positives is some artifact of newer data entry techniques. They state, specifically, that the upswing in the reported maternal mortality rate was driven by an increase in e-reporting:

"The percentage of death certificates submitted electronically increased from 63% in 2010 to 91% in 2012"

But... if electronic reporting was the problem, why wouldn't the problem have shown up in 2010 when 63% was already e-reported? Why do they think an incremental 28 percentage points was pivotal when the first 63% was not? And, perhaps most importantly, why do they skip over 2011 when that year (not 2012) was the pivotal year for the increase in reported maternal deaths in Texas? (2011 was also the year TX began restricting family planning and reproductive health services.)

Texas Maternal Mortality Trend. Source: CDC WONDER, Multiple Cause of Death database and natality database. Note: CDC reports 148 deaths in ICD-10 codes A34, O00–O95, O98–O99; TX reports 147.
